Category: SharePoint 2010

SP2010 “Cannot access local farm” issue

An error I’ve frequently encountered is the “Cannot access local farm” issue. This can come up when attempting to run a PowerShell script or STSADM command, and looks something like this:

Get-SPWeb : Cannot access the local farm. Verify that the local farm is properly configured, currently available, and that you have the appropriate permission to access the database before trying again.

To work around this, run the following script in the SharePoint Management Shell, as a farm administrator.

Get-SPDatabase | Add-SPShellAdmin domain\username

This grants the user shell access to every database in the farm, including the configuration database and the content databases.

To reverse the above command:

Get-SPDatabase | Remove-SPShellAdmin domain\username

SP2010 Search Primer

Find the Search Administration Console (from central admin)

- Select service applications

- Select the search service application.

Find and set Crawl Rules

- Crawl rules are located here

- Crawl Rules can be used to Include or Exclude. In the following example, there are three rules, used as filters (to exclude):

Force an incremental or full crawl

- This can be a bit tricky to find. Select Content Sources

- There should be only one content source present, unless you have set up others. Click the dropdown next to it – you’ll see options to crawl (which will be grayed out if a crawl is presently running):

SharePoint File Downloader

At Synteractive, our SharePoint deployments often have both custom functionality AND a nice UI. So (in our most interesting projects) we’ll have designers working with developers. As a result, we used to end up with one of the following situations:

- Developers’ deployed WSP’s overwrite changes in asp, page layouts or master pages.

- Someone wants to back up all content or styles so it can be used in another project.

- People tiptoeing VERY CAREFULLY around each other’s code, so as not to overwrite it – but no process in place to prevent that.

- Some unlucky person gets to copy each file, one by one, from one environment to another. Ugh.

I started wishing that SOMEONE had created a tool to pull down our customized SharePoint content (e.g. pages, master pages, xsl, css, js) en masse, so that I could reference it later, check it into Subversion or integrate it into a WSP for later deployment to a Production environment.

Introducing SPFileDownloader

Anyone who works with me knows that I love to automate things, and of course I wanted to save time. So I created a small program which uses SharePoint Web Services to download the contents of lists or folders specified by the user. It’s a command line utility, but the way it’s written, it could be retooled as PowerShell, Windows Forms or whatever.

Since this is a command line utility, various arguments are necessary to tell it where to get the files, authentication information, etc. If you invoke the program without arguments, you get the following help info:

Usage:

SPFileDownloader <web url> <list | list/folder/subfolder> <target dir local> <username> <password> [switches]

switches:

f Use form-based authentication

r Recursive - recurse through subfolders

o Overwrite - overwrite local files

p Pause - pause for user input after run

So a typical usage might look something like this:

> SPFileDownloader http://mysite "Style Library" c:\dev\test DOMAIN\username mypassword po

Once you get past this, the usage is self-explanatory. It can handle form-based or basic authentication. You can recurse through directories (particularly useful if you want to back up the entire Style Library).
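To make the argument layout concrete, here is a minimal sketch of how the usage line above could be parsed. It is written in JavaScript purely for illustration; `parseArgs` and the field names it returns are hypothetical and are not taken from the SPFileDownloader source.

```javascript
// Hypothetical sketch: parseArgs and its field names are illustrative,
// not part of the actual SPFileDownloader code.
function parseArgs(argv) {
  if (argv.length < 5) {
    throw new Error(
      "Usage: SPFileDownloader <web url> <list | list/folder/subfolder> " +
      "<target dir local> <username> <password> [switches]"
    );
  }
  // The sixth argument is optional; default to no switches.
  const [webUrl, listPath, targetDir, username, password, switches = ""] = argv;
  const flags = switches.toLowerCase();
  return {
    webUrl,
    listPath,
    targetDir,
    username,
    password,
    formsAuth: flags.includes("f"), // f: form-based authentication
    recursive: flags.includes("r"), // r: recurse through subfolders
    overwrite: flags.includes("o"), // o: overwrite local files
    pause: flags.includes("p"),     // p: pause for user input after run
  };
}

// The "typical usage" from above, as an argument array.
const options = parseArgs([
  "http://mysite", "Style Library", "c:\\dev\\test",
  "DOMAIN\\username", "mypassword", "po",
]);
console.log(options.overwrite, options.pause, options.recursive); // prints "true true false"
```

The switches are treated as a single string of letters, so `po` and `op` mean the same thing.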

All source code is included here. A couple of disclaimers: This code is provided as-is! I won’t support it, though please leave comments if this has been useful to you, or if you have suggestions. Finally, this code is, um, a bit sloppy. It started out mostly as snippets compiled from elsewhere. I’ve spent some time refactoring it, but it doesn’t pay to be perfectionistic here. It works.

Enjoy!

Place the user name anywhere

Everyone loves to see their name on a web page. It’s verification that the user is logged in properly, and perhaps validation of some sort? Well without getting into the psychology of it, I’d like to share a couple of approaches that I’ve taken to further personalize custom SharePoint pages.

The other day I was asked for a “quick script” to display the user’s login name elsewhere on the page. This assumes that you have not removed the login link located at the top right of the page. For this, you need to be using jQuery. Thanks to Matt Huber’s blog for this idea, which I’ve taken a step further.

$(document).ready(function(){

var name = $(".s4-trc-container-menu span a span").text();

$("#myname").text(name);

});

And place this html wherever you want the name to appear.

<span id="myname"></span>

Similarly, here’s a slightly more sophisticated way to create a custom login link, complete with user name. This is a C#-based approach, so the following code can go in the code-behind or inline code – perhaps in the override of OnLoad.

if (HttpContext.Current.User.Identity.IsAuthenticated)

{

litName.Visible = true;

//a bit of calisthenics to remove the domain.

string[] displayNameComponents = SPContext.Current.Web.CurrentUser.Name.Split(new char[] { '\\' });

litName.Text = displayNameComponents[displayNameComponents.Length - 1];

}

else

{

lnkSignIn.Visible = true;

}

Here’s the placeholder for the asp page:

<asp:Literal Visible="false" ID="litName" runat="server"/> <asp:Hyperlink Visible="false" NavigateUrl="/_layouts/Login.aspx" ID="lnkSignIn" runat="server">Sign In</asp:Hyperlink> <asp:Hyperlink Visible="true" onclick="STSNavigate2(event,'/_layouts/SignOut.aspx')" ID="lnkSignOut" runat="server">Sign Out</asp:Hyperlink>
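The same domain-stripping “calisthenics” can be done on the client if the text you pull out with jQuery includes a domain prefix. This is a small sketch only; `stripDomain` is a hypothetical helper name.

```javascript
// Hypothetical helper: returns the part of a login name after the last backslash.
// Names without a domain prefix are returned unchanged.
function stripDomain(loginName) {
  const parts = loginName.split("\\");
  return parts[parts.length - 1];
}

console.log(stripDomain("DOMAIN\\username")); // prints "username"
console.log(stripDomain("username"));         // prints "username"
```

In the jQuery snippet above, you would then write `$("#myname").text(stripDomain(name));`.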

That’s all, folks!

SharePoint Drag/Drop file upload using the ASP.NET AJAX Control Toolkit

In my current work on a custom interface for a document management system, I was challenged to create a drag/drop interface for adding files to SharePoint.

I investigated a number of alternatives. Initially these were my considerations:

- Not all browsers (most notably IE9) support HTML5, which natively supports drag/drop.

- Even if HTML5 is supported, there’s still quite a bit of wiring to do in JavaScript.

- As developers, we try not to reinvent the wheel. If a problem has already been solved, it’s usually better to use that solution – at least as a starting point.

As it turns out, there’s no shortage of drag/drop solutions out there. But try finding one that applies to SharePoint, provides compatibility with non-HTML5 browsers, shows a progress bar, etc. In the end I decided to try the ASP.NET AJAX Control Toolkit.

Getting started

On the surface, installation is straightforward for anyone who is familiar with ASP.NET development. Add an entry to the web.config, drop the appropriate DLL in the GAC, and that’s pretty much it. However, (ahem) we’re using SharePoint here, which brings up a couple of issues that we’ll need to address. Fortunately I’ve already hammered out these issues, so you don’t have to.

Issue 1: Version

We need to use the .NET 3.5 version. There are two compiled versions. We are again reminded that SharePoint 2010 doesn’t use the latest version of .NET.

Issue 2: ScriptManager

The ScriptManager packaged with the Toolkit needs to be used, INSTEAD of the standard ASP ScriptManager which is on all master pages in SharePoint.

before:

<asp:ScriptManager id="ScriptManager" runat="server" …." />

after:

<%@ Register Assembly="AjaxControlToolkit, Version=3.5.60501.0, Culture=neutral, PublicKeyToken=28f01b0e84b6d53e" Namespace="AjaxControlToolkit" TagPrefix="ajaxToolkit" %> <ajaxToolkit:ToolkitScriptManager runat="server" …. />

Issue 3: File Uploader is not ready for prime time

The drag/drop control is called AjaxFileUpload (not to be confused with AsyncFileUpload, which is also part of the toolkit). Once you have the ScriptManager issue handled, you’ll be able to get things working using the simple demo. However, I found (as of the 5/1/12 release) that there were a couple of bugs.

1. The control assumes that no parameters are present on the page which contains the control. This is documented in this bug report. I won’t go into detail on this. Suffice it to say that it should be fixed in the future. The impact is that the control cannot be used on most SharePoint pages including dialogs invoked using SP.UI.ModalDialog.showModalDialog() – which appends the parameter IsDlg=1 even if you don’t explicitly do so!

2. A number of features of the drag/drop uploader do not work. For example, exceptions generated on the server while a file is uploading are not “passed through” to the JavaScript event which should be fired in the event of an error. In fact, when I delved into the code, I found that the OnClientUploadError event is never fired!

The way I fixed this issue was to download the AjaxToolkit code and “just fix it”. I spent a few hours learning how the code works, and modified the code itself, in C# and Javascript. For anyone interested in following this path, you can download the changed code here (I only included the files I changed). When you download the code, make sure to use the version targeted to .NET 3.5. It’s a VS 2008 project, but of course it’s ok for you to convert it to VS 2010.

Finally: Hooking it up to SharePoint

The file uploader control is very clever. It posts back to the same page it’s located on (this is the ajax part) and calls a code block that you can define using C# in your ASP or Codebehind. Mine looks something like this:

protected void ajaxUpload1_OnUploadComplete(object sender, AjaxControlToolkit.AjaxFileUploadEventArgs e)

{

//you’ll need some logic in here to determine where to put the files.

string filename = string.Format("{0}/{1}", SPContext.Current.Site.Url, e.FileName);

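using (SPSite site = new SPSite(uploadTarget)) //uploadTarget: url of the destination site, set by your own logic. Disposing the SPSite avoids a leak.

using (SPWeb web = site.OpenWeb())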

{

web.AllowUnsafeUpdates = true;

web.Files.Add(filename, e.GetContents(), true);

web.AllowUnsafeUpdates = false;

}

}

From the client interface end of things, you’ll also need to put in code to provide feedback. The code located here provides a pretty good example of that; here’s another one:

//hook this up to the OnClientUploadError property of the control

function onError(sender, e) {

//this bit of code adds an error message to a designated “errors” area.

var test = document.getElementById("uploadErrors");

test.style.display = 'block';

var fileList = document.getElementById("fileList");

var item = document.createElement('div');

item.style.padding = '4px';

item.appendChild(createFileInfo(e));

fileList.appendChild(item);

}

function createFileInfo(e) {

var holder = document.createElement('div');

holder.appendChild(document.createTextNode(e.get_fileName() + ': ' + e.get_errorMessage()));

return holder;

}

//hook this up to the OnClientUploadComplete property of the control

function onClientUploadComplete(sender, e) {

alert("uploaded");

}

Using this method, errors are returned to the web user. Once our designers get through with it, it’s going to be REALLY slick!

Implementing custom folder statistics in SharePoint 2010 using C#

In one scenario this past week, I needed to display the total number of files and folders within any folder. These numbers needed to be recursive – so if a document is three folders deep within the current folder, that still counts. Same with folders. I also needed to show a date representing the modified date of the MOST RECENT item or folder, again recursive to the last level.

I found a way to achieve this using a single CAML query and some fancy XML parsing!

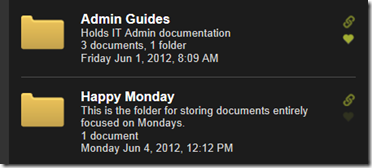

The folder and document counts would be displayed like this:

I’m already using other mechanisms to get lists of folders for display. The XSL-driven styling shown is probably the topic of a future post. The challenge was, how to get those counts and dates…

I started with an SPQuery. The following recursive query pulls the basic information required, within the folder specified.

SPQuery query = new SPQuery();

query.Folder = folder;

query.Query = string.Empty;

query.RowLimit = uint.MaxValue;

query.ViewFields = "<FieldRef Name='Modified' Nullable='True'/><FieldRef Name='ContentTypeId' Nullable='True'/>";

query.ViewFieldsOnly = true;

query.ViewAttributes = "Scope='RecursiveAll'";

SPListItemCollection items = parentList.GetItems(query);

string itemsXml = items.Xml;

The trick here is the ViewAttributes property. There are a few different ways to run an SPQuery, and this was the Scope that gave me the results I needed. Note that an SPListItemCollection may be accessed as API objects OR as XML, and I chose to pull out my results using the XML.

Our query result is something like this (if you look closely, you’ll see that we have two folders and two documents here):

<rs:data ItemCount="4">

<z:row ows_Modified="2012-05-31 12:34:01" ows_ContentTypeId="0x01200082B0C5829FE047A1BF58F68DA1DAB12500C7BCD99F82ACA340A0131D59CE62371B" ows__ModerationStatus="0" ows__Level="1" ows_ID="1" ows_Created_x0020_Date="1;#2012-05-31 12:34:01" ows_PermMask="0x7fffffffffffffff" ows_FileRef="1;#depts/it/Lists/CTADocuments/mygroup" />

<z:row ows_Modified="2012-05-31 12:36:51" ows_ContentTypeId="0x01200082B0C5829FE047A1BF58F68DA1DAB12500C7BCD99F82ACA340A0131D59CE62371B" ows__ModerationStatus="0" ows__Level="1" ows_ID="2" ows_Created_x0020_Date="2;#2012-05-31 12:36:51" ows_PermMask="0x7fffffffffffffff" ows_FileRef="2;#depts/it/Lists/CTADocuments/mygroup/another one" />

<z:row ows_Modified="2012-05-31 17:49:45" ows_ContentTypeId="0x010100EB5D8789C471B04E80E2A7481607C23B" ows__ModerationStatus="0" ows__Level="1" ows_ID="9" ows_Created_x0020_Date="9;#2012-05-31 17:49:45" ows_PermMask="0x7fffffffffffffff" ows_FileRef="9;#depts/it/Lists/CTADocuments/Just_the_Essentials_Publishing.master" />

<z:row ows_Modified="2012-06-07 17:05:11" ows_ContentTypeId="0x010100EB5D8789C471B04E80E2A7481607C23B" ows__ModerationStatus="0" ows__Level="1" ows_ID="10" ows_Created_x0020_Date="10;#2012-06-07 17:05:11" ows_PermMask="0x7fffffffffffffff" ows_FileRef="10;#depts/it/Lists/CTADocuments/mygroup/another one/junk.txt" />

</rs:data>

In this case it was more straightforward to take the raw XML and get what I needed using LINQ with lambda expressions. For example, to get document counts I applied an understanding of how content type IDs work. All content type IDs which inherit from Document (with ID 0x0101) start with that prefix:

const string docCtID = "0x0101";

const string ctypeIDElement = "ows_ContentTypeId";

documentCount = rowElements

.Where(dt => dt.Attribute(ctypeIDElement).Value.StartsWith(docCtID))

.Count();

Putting it all together

Finally, we can wrap all of this logic into a single, fairly dense method. And achieve our goal using only one query!

const string docCtID = "0x0101";

const string folderCtID = "0x0120";

const string ctypeIDElement = "ows_ContentTypeId";

const string modIDElement = "ows_Modified";

internal static void GetFolderStats(this SPFolder folder, SPList parentList, out string modDate, out int documentCount, out int folderCount)

{

SPQuery query = new SPQuery();

query.Folder = folder;

query.Query = string.Empty;

query.RowLimit = uint.MaxValue;

query.ViewFields = "<FieldRef Name='Modified' Nullable='True'/><FieldRef Name='ContentTypeId' Nullable='True'/>";

query.ViewFieldsOnly = true;

query.ViewAttributes = "Scope='RecursiveAll'";

SPListItemCollection items = parentList.GetItems(query);

//get query results and read them as xml.

XDocument doc;

using (TextReader tr = new StringReader(items.Xml))

{

doc = XDocument.Load(tr);

}

var rowElements = doc.Root.Element(XName.Get("data", "urn:schemas-microsoft-com:rowset")).Elements();

documentCount = rowElements

.Where(dt => dt.Attribute(ctypeIDElement).Value.StartsWith(docCtID))

.Count();

folderCount = rowElements

.Where(dt => dt.Attribute(ctypeIDElement).Value.StartsWith(folderCtID))

.Count();

if (items.Count == 0)

{

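//Value() and MODIFIED are not SharePoint API members; presumably they are helpers defined elsewhere in the project (not shown).

modDate = folder.Item.Value(MODIFIED);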

}

else

{

var dtAttList = rowElements

.Attributes()

.Where(dt => dt.Name == modIDElement)

.ToList();

dtAttList.Sort((a, b) => string.Compare(b.Value, a.Value)); //sort on values, most recent at top

modDate = dtAttList[0].Value;

}

}
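To see the counting and date logic in isolation, here is a sketch of the same idea in JavaScript, run against the four rows from the sample result above. The row objects and the `getFolderStats` function here are illustrative stand-ins, not SharePoint API. Note that picking the latest date by sorting strings only works because the values are in `yyyy-MM-dd HH:mm:ss` form, where text order matches chronological order.

```javascript
const DOC_CT_ID = "0x0101";    // content types inheriting from Document
const FOLDER_CT_ID = "0x0120"; // content types inheriting from Folder

// rows: plain objects standing in for the <z:row> attributes (illustrative shape).
function getFolderStats(rows) {
  const documentCount = rows
    .filter(r => r.ows_ContentTypeId.startsWith(DOC_CT_ID)).length;
  const folderCount = rows
    .filter(r => r.ows_ContentTypeId.startsWith(FOLDER_CT_ID)).length;
  // Sort ascending as text, then reverse so the most recent date is first.
  const dates = rows.map(r => r.ows_Modified).sort().reverse();
  return { documentCount, folderCount, modDate: dates.length ? dates[0] : null };
}

const FOLDER_ID = "0x01200082B0C5829FE047A1BF58F68DA1DAB12500C7BCD99F82ACA340A0131D59CE62371B";
const DOC_ID = "0x010100EB5D8789C471B04E80E2A7481607C23B";

const sampleRows = [
  { ows_Modified: "2012-05-31 12:34:01", ows_ContentTypeId: FOLDER_ID },
  { ows_Modified: "2012-05-31 12:36:51", ows_ContentTypeId: FOLDER_ID },
  { ows_Modified: "2012-05-31 17:49:45", ows_ContentTypeId: DOC_ID },
  { ows_Modified: "2012-06-07 17:05:11", ows_ContentTypeId: DOC_ID },
];

const stats = getFolderStats(sampleRows);
console.log(stats.documentCount, stats.folderCount, stats.modDate);
// prints "2 2 2012-06-07 17:05:11"
```

Unlike the C# method, this sketch returns null for an empty folder rather than falling back to the folder’s own modified date.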

Typical Web.config Tweaks

These are some web.config modifications that I typically need at my fingertips. After all, who can memorize all this stuff?

Allow scripts to be embedded in asp pages.

<PageParserPaths>

<PageParserPath VirtualPath="/*" CompilationMode="Always" AllowServerSideScript="true" IncludeSubFolders="true" />

</PageParserPaths>

Allow asp scripts to access external libraries

In the following case, SharePoint Taxonomy libraries:

<add assembly="Microsoft.SharePoint.Taxonomy, Version=14.0.0.0, Culture=neutral, PublicKeyToken=71e9bce111e9429c" />

Create a reference to custom web service

Enabling the HttpPost protocol for the service lets each of its web methods be called with a plain HTTP POST.

<location path="_layouts/SPUtilities/SPservice.asmx">

<system.web>

<webServices>

<protocols>

<add name="HttpPost" />

</protocols>

</webServices>

</system.web>

</location>

Error reporting

Show a detailed error (rather than the generic SharePoint error).

Before:

<SafeMode MaxControls="200" CallStack="false" DirectFileDependencies="10" TotalFileDependencies="50" AllowPageLevelTrace="false">

After:

<SafeMode MaxControls="200" CallStack="true" DirectFileDependencies="10" TotalFileDependencies="50" AllowPageLevelTrace="true">

Before:

<customErrors mode="On" />

After:

<customErrors mode="Off" />

Implementing Favorites in SharePoint 2010

As a SharePoint consultant, I’m often asked to provide features which “should” exist, but Microsoft just doesn’t provide. Often these are features which might be common in the Web 2.0 world, but – well, not here.

In one of my current projects (based on a Publishing Site), the paradigm of a “favorite button” is central to the desired site functionality. Users should be able to “favorite” any document or folder. These documents and folders are visible in a centrally located list, along with metadata items such as Modified Date and Author.

There are many possible ways to implement this, but initially I chose to use SharePoint 2010’s Social Tagging feature as the basic building block. It’s already set up to store link information by user, and integrates with the Keywords area of the Term Store. On the back end, this is the same mechanism used when you click “I like it” on any page.

This article is more concerned with the back end of things – the code listed below can be wrapped in web services, web parts or whatever you like, to provide functionality to the end user.

Tagging items

Social Tags are essentially key/value pairs, with the key being a Uri (e.g. the url of a web page), and the value being a description. In addition to the tag of “I like it”, other tags may be created in the Keywords area of the Term Store, and then used to tag documents. Here’s the code I’m using to tag a document:

/// <summary>

/// Updates or adds a social tag for this user.

/// User info is derived from context.

/// Tag is added to term store if necessary.

/// </summary>

public static void UpdateSocialTag(SPSite site, string socialTagName, string title, string url)

{

SPServiceContext context = SPServiceContext.GetContext(site);

SocialTagManager mySocialTagManager = new SocialTagManager(context);

//Retrieve the taxonomy session from the SocialTagManager.

TaxonomySession taxSession = mySocialTagManager.TaxonomySession;

TermStore termStore = taxSession.DefaultKeywordsTermStore;

Term newTerm = termStore.FindOrCreateKeyword(socialTagName);

Uri tagUri = ConvertToUri(site, url);

mySocialTagManager.AddTag(tagUri, newTerm, title);

}

/// <summary>

/// Create a uri from this url.

/// If the url is not a well-formed absolute uri (e.g. it is relative), use the site url as base.

/// </summary>

public static Uri ConvertToUri(SPSite site, string url) //static, so that the static UpdateSocialTag method can call it

{

Uri tagUri;

if (Uri.IsWellFormedUriString(url, UriKind.Absolute))

{

tagUri = new Uri(url);

}

else

{

//try again by prepending the site uri

if (!url.StartsWith("/")) url = "/" + url;

tagUri = new Uri(site.Url + url);

}

return tagUri;

}

The reason this method is called “Update” is that if this Uri has already been tagged, that tag will be replaced.
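The url handling in ConvertToUri is easy to try out on its own. Here is a rough JavaScript sketch of the same branching; `toAbsoluteUrl` is a hypothetical name, and the regular expression is only a loose stand-in for `Uri.IsWellFormedUriString`, which is stricter (for example, about unescaped spaces).

```javascript
// Hypothetical helper mirroring the two branches of ConvertToUri.
function toAbsoluteUrl(siteUrl, url) {
  // Loose check for "scheme://..."; an assumption, not the .NET rule set.
  const isAbsolute = /^[a-z][a-z0-9+.-]*:\/\//i.test(url);
  if (isAbsolute) return url;
  // Relative url: make sure it starts with a slash, then prepend the site url.
  if (!url.startsWith("/")) url = "/" + url;
  return siteUrl + url;
}

console.log(toAbsoluteUrl("http://mysite", "Shared Documents/a.docx"));
// prints "http://mysite/Shared Documents/a.docx"
console.log(toAbsoluteUrl("http://mysite", "http://other/page.aspx"));
// prints "http://other/page.aspx"
```

One thing to watch for with this approach: if the site collection does not live at the root of the web application and the url passed in is server-relative, the site path will appear twice in the result.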

The FindOrCreateKeyword extension is defined here:

public static class KeywordExtensions

{

//Find this keyword in the term store. If it doesn't exist, create it.

public static Term FindOrCreateKeyword(this TermStore termStore, string socialTagName)

{

Term term = FindKeyword(termStore, socialTagName);

if (term == null)

{

term = termStore.KeywordsTermSet.CreateTerm(socialTagName, termStore.DefaultLanguage);

termStore.CommitAll();

}

return term;

}

public static Term FindKeyword(this TermStore termStore, string socialTagName)

{

Term term = termStore.KeywordsTermSet.Terms.FirstOrDefault(t => string.Compare(t.Name, socialTagName, true) == 0);

return term;

}

public static Term KeywordItems(this TermStore termStore, string socialTagName)

{

Term term = termStore.KeywordsTermSet.Terms.FirstOrDefault(t => string.Compare(t.Name, socialTagName, true) == 0);

return term;

}

}
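The find-or-create pattern used above is worth seeing without the term store around it. In this JavaScript sketch an ordinary array stands in for the Keywords term set, and the function names are illustrative only. The point is that the lookup is case-insensitive, so a second request with different casing returns the existing term instead of creating a duplicate.

```javascript
// keywords: a plain array standing in for the Keywords term set (assumption).
function findKeyword(keywords, name) {
  const wanted = name.toLowerCase();
  return keywords.find(k => k.name.toLowerCase() === wanted) || null;
}

function findOrCreateKeyword(keywords, name) {
  let term = findKeyword(keywords, name);
  if (term === null) {
    term = { name };
    keywords.push(term); // the C# version also calls CommitAll() at this point
  }
  return term;
}

const keywords = [{ name: "I like it" }];
const first = findOrCreateKeyword(keywords, "Favorite");
const second = findOrCreateKeyword(keywords, "FAVORITE");
console.log(first === second, keywords.length); // prints "true 2"
```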

Retrieving a list of favorites

I’ll also want to display my list of favorites. Social tagging does not intrinsically lend itself to pulling out the list of items which have a particular tag, but we can add that capability using the following code:

/// <summary>

/// Get the items tagged with TermName for this user.

/// If empty, return an empty array

/// </summary>

internal static SocialTag[] GetUserSocialTags(SPSite site, string termName)

{

List<SocialTag> socialTags = new List<SocialTag>();

SPServiceContext serviceContext = SPServiceContext.GetContext(site);

UserProfileManager mngr = new UserProfileManager(serviceContext); // load the UserProfileManager

UserProfile currentProfile = mngr.GetUserProfile(false);// Get the user’s profile

if (currentProfile == null) return socialTags.ToArray(); // user must have profile

SocialTagManager smngr = new SocialTagManager(serviceContext);

// Get the SocialTerm corresponding to this term.

SocialTerm favTerm = GetSocialTerm(termName, currentProfile, smngr);

if (favTerm == null) return socialTags.ToArray();

// Get the tags for the user and keep only those that use this term.

SocialTag[] tags = smngr.GetTags(currentProfile);

foreach (SocialTag tag in tags)

if (tag.Term == favTerm.Term)

socialTags.Add(tag);

return socialTags.ToArray();

}

/// <summary>

/// retrieve a named social term.

/// </summary>

private static SocialTerm GetSocialTerm(string tag, UserProfile currentProfile, SocialTagManager smngr)

{

// Get the terms for the user

SocialTerm[] terms = smngr.GetTerms(currentProfile);

SocialTerm favTerm = null;

//Iterate through the terms and search for the passed tag

foreach (SocialTerm t in terms)

{

if (string.Compare(t.Term.Name, tag, true) == 0)

{

favTerm = t;

break;

}

}

return favTerm;

}
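The retrieval code boils down to two steps: resolve the term by name for this user, then keep only the tags that point at that term. Here is a sketch of that flow in JavaScript, with plain objects standing in for the user profile, terms and tags. All of the shapes and names are assumptions made for illustration.

```javascript
// profile: illustrative object of the shape { terms: [...], tags: [...] }.
function getUserSocialTags(profile, termName) {
  if (!profile) return []; // user must have a profile
  const wanted = termName.toLowerCase();
  // Step 1: find the term by name, ignoring case.
  const favTerm = profile.terms.find(t => t.name.toLowerCase() === wanted);
  if (!favTerm) return [];
  // Step 2: keep only the tags that use that term.
  return profile.tags.filter(tag => tag.term === favTerm);
}

const favorite = { name: "Favorite" };
const likeIt = { name: "I like it" };
const profile = {
  terms: [favorite, likeIt],
  tags: [
    { url: "http://mysite/a.docx", term: favorite },
    { url: "http://mysite/b.docx", term: likeIt },
    { url: "http://mysite/folder", term: favorite },
  ],
};

const favoriteUrls = getUserSocialTags(profile, "favorite").map(t => t.url);
console.log(favoriteUrls.join(", "));
// prints "http://mysite/a.docx, http://mysite/folder"
```

As in the C# version, both “no profile” and “term never used by this user” come back as an empty list, so the caller does not need to check for null.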

This code forms the “core” of my favorites system. The ability to tag a document (or remove a tag) is wrapped in a web service to allow us to provide AJAX functionality. I wrote a web part to display the favorites, with simple sorting and filtering.

Metadata features

One missing element is the metadata, a part of the requirement I mentioned above. For this, I wrote code (as part of my Favorites Web Part) to pull out the necessary metadata for each Uri given, provided it’s a reference to the current site.

Building a SharePoint 2010 WSP Using TeamCity

I’ve been using Continuous Integration for years. It’s been a part of almost every project I’ve worked on. Prior to a few months ago, I was a CruiseControl.NET devotee, but recently a few of my colleagues expressed a preference for TeamCity. I tried it, and now I’m a convert!

However, the Microsoft stack is not set up with CI in mind. You’ll need to use a few tricks to set up your build on a computer which does not have a full dev environment. This is particularly true when developing for SharePoint 2010 using Visual Studio 2010.

Using TeamCity

Setting up TeamCity is pretty straightforward. It’s a free product (up to 20 builds), and can be downloaded from the JetBrains web site. I won’t go into the basics of TeamCity right now. However, once the product is installed, start by setting up a simple project with version control settings but no build steps, so that it simply downloads the code from your repository.

Preparing to use MSBuild

There are quite a few dependencies to satisfy, for a SharePoint build. You’re using .NET 3.5, but you’ll want to make sure to use MSBuild 4.0 if you’ve been using VS2010. Features like WSP creation are not available in earlier versions.

Setting up dependencies

I used to do this step through trial and error, but recently found a great resource for this: http://msdn.microsoft.com/en-us/library/ff622991.aspx. Follow the instructions in step 1 (Prepare the Build System). Obviously we’re not using TFS here, so skip that step. This step outlines the dependencies, and instructs us on which dll’s we need to manually add to the GAC. There’s a whole list. The short explanation is that you copy the dll’s from your dev environment, and place them into the GAC on the build server.

One “gotcha” here is that you need to make sure you’re using the correct version of gacutil.exe when adding items to the GAC. The reason is that .NET 4.0 adds a second GAC(!), so if you get the following message, you’ll know you have this problem:

Failure adding assembly to the cache: This assembly is built by a runtime newer than the currently loaded runtime and cannot be loaded.

On my machine, the proper version of gacutil.exe was located at

C:\Program Files\Microsoft SDKs\Windows\v7.1\Bin\NETFX 4.0 Tools\gacutil.exe

Make sure you are working from the most recent version of the Microsoft Windows SDK. The following page should take you there: http://msdn.microsoft.com/en-us/windows/bb980924.aspx

Remember, YMMV here. If you do not have SharePoint installed on your build machine, you’ll have to add any SharePoint dll’s necessary to build your project as well. Anything that you miss will be readily apparent in the next step…

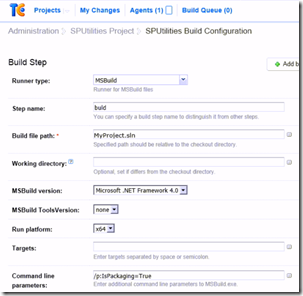

Adding your MSBuild step to TeamCity

Go back to the project you set up, and add a Build step. Select MSBuild from the Dropdown. Your project should look similar to this:

Notice the Command Line Parameters setting. IsPackaging=True causes the build to actually generate WSP’s for any of your projects that are configured to do so. Without this parameter, a simple build will be performed.

Hope you enjoyed my first post! Let me know how it goes.